Nvidia GPU Computing Quick-Start Guide

Nvidia recently launched Nvidia GPU Cloud (NGC), which provides access to its container registry so that researchers can get deep learning containers running quickly, whether on their own hardware, on public cloud providers, or on premium clouds. There is no cost to access and use the NGC service.

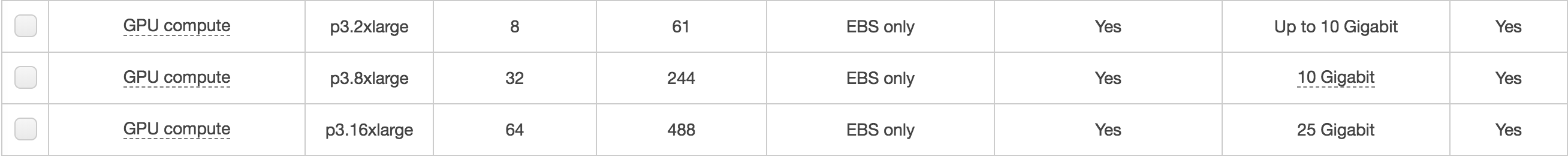

Any ORNL user or project that has requested an AWS account (or has an IAM user with the necessary permissions) can launch instances with up to 8 GPUs each. They can immediately pull and run containers from the Nvidia registry, which are the same containers used on Nvidia's DGX platform. The containers are updated monthly, and you can also build your own.

Creating the VM(s)

- Start here: https://ngc.nvidia.com/docs/aws. This page links to the AWS console, where you sign in to AWS with your credentials.

Note: Need AWS account documentation? Click here.

- A dashboard appears where you can select the service you wish to use. In the search box, type `EC2` and click the option when it appears.

- In the EC2 Management Console, click Launch Instance.

- On the screens that follow, you need to select the following:

- Once the instance flavor is selected, you can immediately choose Review and Launch or Configure Instance Details. In this tutorial, we will choose Review and Launch.

📝 Note: Remember that once you launch a VM, you are responsible for all incurred charges. These will be billed to the IAM user's project-provided ORNL charge number.
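If you prefer the command line, you can also launch an instance with the AWS CLI. This minimal sketch assumes the CLI is installed and configured with your IAM credentials, and that a key pair already exists. Note that the console flow only creates the key pair in the next step. `launch_gpu_instance` is a hypothetical helper name. The AMI ID and instance type are values you supply, such as the image you would pick in the console. The same charges apply as for a console launch.

```shell
# Sketch: launch one GPU instance with the AWS CLI instead of the console.
# Assumes the AWS CLI is installed and configured with your IAM credentials.
# launch_gpu_instance is a hypothetical helper; all three arguments are yours to supply.
launch_gpu_instance() {
  local ami_id="$1" instance_type="$2" key_name="$3"
  # --query/--output print only the new instance ID, so it can be captured.
  aws ec2 run-instances \
    --image-id "$ami_id" \
    --instance-type "$instance_type" \
    --key-name "$key_name" \
    --count 1 \
    --query 'Instances[0].InstanceId' \
    --output text
}
```

For example, `instance_id=$(launch_gpu_instance ami-0123456789abcdef0 p3.2xlarge my-key-pair)`. In this example, the AMI ID is a made-up placeholder.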

Accessing the VM

- After you click Launch for your VM(s), you are prompted to select a key pair. We recommend creating a new key pair and downloading its private key. Be sure to store it in a secure location.
- To keep the private key secure, restrict the file's permissions so that only you can read it. ssh refuses to use a private key file that other users can read. You can also move the file to your `~/.ssh` folder if you wish.

chmod 400 /path/to/my-key-pair*

mv /path/to/my-key-pair* ~/.ssh/
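You can wrap the two commands above in one reusable step. This is a minimal sketch assuming a POSIX-style shell. `install_key` is a hypothetical helper name, and the destination directory defaults to `~/.ssh`.

```shell
# Sketch: move a downloaded private key into ~/.ssh and make it owner-read-only.
# install_key is a hypothetical helper; the second argument overrides ~/.ssh.
install_key() {
  local src="$1"
  local dest_dir="${2:-$HOME/.ssh}"
  local dest
  dest="$dest_dir/$(basename "$src")"
  # Keep the key directory private to you.
  mkdir -p "$dest_dir"
  chmod 700 "$dest_dir"
  mv "$src" "$dest" || return 1
  # 400 = read-only for the owner; ssh ignores keys that others can read.
  chmod 400 "$dest"
  printf '%s\n' "$dest"
}
```

For example, `install_key ~/Downloads/my-key-pair.pem` prints the key's new path. The download location is only an example.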

- Wait for the VM(s) to launch and for the status checks to complete. You can click View Instances to monitor progress. This should take no more than a few minutes.
- Now you can access the VM via SSH (through corkscrew, if you are connecting from ORNL's network).

ssh -i ~/.ssh/my-key-pair.pem -o "ProxyCommand corkscrew snowman.ornl.gov 3128 %h %p" ec2-user@<IP-address>

* Depending on the machine image (AMI) you chose, the username is either `ubuntu` or `ec2-user`.

* Find your instance's public IP address on the instance dashboard.

<a target="_new" href="/user-contributed-tutorials/aws-tutorials/aws-deep-learning/screenshots/aws-ec2-ip.png"><img src="/user-contributed-tutorials/aws-tutorials/aws-deep-learning/screenshots/aws-ec2-ip.png" style="border-style:ridge;border-color:#bfbfbf;border-width:1px;width:550px;" /></a>
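To avoid retyping the proxy and key options, you can save them in an SSH client config entry. This minimal sketch assumes OpenSSH and corkscrew are installed. `add_ssh_host` and the alias you pass are hypothetical names. Remove the `ProxyCommand` line when you connect from outside ORNL's network.

```shell
# Sketch: append a Host entry to an SSH client config so that `ssh <name>`
# uses your key and tunnels through ORNL's proxy with corkscrew.
# add_ssh_host is a hypothetical helper; the 5th argument overrides ~/.ssh/config.
add_ssh_host() {
  local name="$1" ip="$2" user="$3" key="$4"
  local config="${5:-$HOME/.ssh/config}"
  cat >> "$config" <<EOF
Host $name
    HostName $ip
    User $user
    IdentityFile $key
    ProxyCommand corkscrew snowman.ornl.gov 3128 %h %p
EOF
}
```

For example, `add_ssh_host ngc-gpu 203.0.113.10 ubuntu ~/.ssh/my-key-pair.pem` lets you connect with `ssh ngc-gpu`. The address shown is a documentation placeholder. An instance's public IP changes after a stop and start, so you may need to update the `HostName` line.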

Using the VM and GPUs

- When you first access the VM, you will probably want to install updates (for security). Type `sudo yum update`, or on Ubuntu, `sudo apt-get update && sudo apt-get upgrade` (`apt-get update` alone only refreshes the package lists).
- Typing `nvidia-smi` runs the Nvidia System Management Interface, which shows the status of each GPU.
- Before using a Docker container, you must first register for an account with Nvidia here: https://ngc.nvidia.com. Click Sign Up and complete the registration process.
- Once registration is complete, you'll be directed to a page where you can generate an API key, which is required to access the Nvidia container registry. Click Generate Key.
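Beyond the default status table, `nvidia-smi` can print one CSV line per GPU, which makes a quick sanity check easy. This minimal sketch confirms that the driver sees as many GPUs as your instance type should have. `check_gpus` is a hypothetical helper name, and you supply the expected count.

```shell
# Sketch: compare the number of GPUs the driver reports with the number expected.
# check_gpus is a hypothetical helper; nvidia-smi's CSV query mode prints one line per GPU.
check_gpus() {
  local expected="$1" found
  found=$(nvidia-smi --query-gpu=index,name --format=csv,noheader | grep -c .)
  echo "found $found GPU(s), expected $expected"
  [ "$found" -eq "$expected" ]
}
```

For example, `check_gpus 8` on an 8-GPU instance prints the count and returns a non-zero status if any GPU is missing.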

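- To set up a container and run a job, start with Docker: install Docker and nvidia-docker, then log in to the Nvidia container registry (nvcr.io) with your API key. Depending on the machine image you chose, Docker may already be installed.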

sudo yum install docker # or, on Ubuntu: sudo apt-get install docker.io

sudo yum install nvidia-docker # or sudo apt-get install nvidia-docker

docker login nvcr.io

* Username: `$oauthtoken` (type this literally)

* Password: `< Your NGC API Key >`
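For scripted setups, you can replace the interactive login by piping the API key to `docker login --password-stdin`, which keeps the key off the `docker` command line. This minimal sketch assumes the key has been exported as `NGC_API_KEY`, a variable name chosen for this example. `ngc_login` is also a hypothetical helper name.

```shell
# Sketch: non-interactive login to nvcr.io.
# Assumes the NGC API key is in NGC_API_KEY (a name chosen for this example).
ngc_login() {
  if [ -z "${NGC_API_KEY:-}" ]; then
    echo "NGC_API_KEY is not set" >&2
    return 1
  fi
  # The username is the literal string $oauthtoken, so it is single-quoted
  # to stop the shell from expanding it as a variable.
  printf '%s' "$NGC_API_KEY" | docker login nvcr.io --username '$oauthtoken' --password-stdin
}
```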

- In this tutorial, we will use a PyTorch example to run an MNIST job. (The MNIST database contains samples of handwritten digits.)

- Next, pull a Docker container image by typing

docker pull nvcr.io/nvidia/pytorch:17.10

- Then run the container, navigate to the example directory, and run the example job:

nvidia-docker run --rm -it nvcr.io/nvidia/pytorch:17.10

cd /opt/pytorch/examples/mnist

python main.py
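You can also run the same job as a single non-interactive command. `docker run`'s `-w` flag sets the working directory inside the container, so the `cd` is unnecessary. When training finishes, the container exits and is removed because of `--rm`. This minimal sketch assumes `nvidia-docker` is installed as above. `run_mnist` is a hypothetical helper name, and the tag defaults to the 17.10 image used in this tutorial.

```shell
# Sketch: pull the NGC PyTorch image and run the MNIST example without an
# interactive shell. run_mnist is a hypothetical helper; pass another tag to override 17.10.
run_mnist() {
  local tag="${1:-17.10}"
  local image="nvcr.io/nvidia/pytorch:${tag}"
  docker pull "$image" || return 1
  # -w replaces the manual `cd` into the example directory.
  nvidia-docker run --rm -w /opt/pytorch/examples/mnist "$image" python main.py
}
```

On Docker 19.03 and later with the NVIDIA Container Toolkit installed, `docker run --gpus all` serves the same purpose as `nvidia-docker run`.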